A Low-Barrier Trial of Open-AutoGLM: An Intelligent Agent Experience Combining Screen Understanding and Automated Execution; Spatial-SSRL-81k: Building a Self-Supervised Improvement Path for Spatial Awareness

While "Doubao Mobile" was still a trending topic, Zhipu AI announced that it had open-sourced its mobile intelligent assistant framework, Open-AutoGLM, which enables multimodal understanding of screen content and automated operation.

Unlike traditional mobile automation tools, the Phone Agent uses a vision-language model to achieve deep semantic understanding of screen content and combines it with intelligent planning to automatically generate and execute operation flows. The system controls the device through ADB (Android Debug Bridge). Users only need to describe their needs in natural language, such as "open Xiaohongshu to search for food", and the Phone Agent automatically parses the intent, understands the current interface, plans the next action, and completes the entire process.
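The perceive, plan, and act flow described above can be sketched roughly as follows. This is a minimal illustration, not Open-AutoGLM's actual code: the `Action` class, `to_adb_command`, `run_task`, and the scripted planner are hypothetical names introduced here, and a real agent would capture a screenshot (for example with `adb exec-out screencap -p`) and ask a vision-language model for the next action. Only the `adb shell input` subcommands are standard ADB; the sketch builds the command lines without running them.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Action:
    """One step chosen by the planner (hypothetical structure)."""
    kind: str          # "tap", "swipe", "type", or "done"
    x: int = 0
    y: int = 0
    x2: int = 0
    y2: int = 0
    text: str = ""


def to_adb_command(action: Action, serial: Optional[str] = None) -> List[str]:
    """Translate an Action into an `adb shell input ...` argument list."""
    base = ["adb"] + (["-s", serial] if serial else [])
    if action.kind == "tap":
        return base + ["shell", "input", "tap", str(action.x), str(action.y)]
    if action.kind == "swipe":
        return base + ["shell", "input", "swipe",
                       str(action.x), str(action.y),
                       str(action.x2), str(action.y2)]
    if action.kind == "type":
        # `input text` expects spaces to be written as %s
        return base + ["shell", "input", "text", action.text.replace(" ", "%s")]
    raise ValueError(f"unsupported action: {action.kind}")


def run_task(task: str,
             plan_next_action: Callable[[str, int], Action],
             max_steps: int = 20) -> List[List[str]]:
    """Collect the ADB commands for a task until the planner reports "done"."""
    commands = []
    for step in range(max_steps):
        action = plan_next_action(task, step)
        if action.kind == "done":
            break
        commands.append(to_adb_command(action))
    return commands


def scripted_planner(task: str, step: int) -> Action:
    """Stand-in for the vision-language model: replays a fixed script."""
    script = [Action("tap", x=540, y=1200),
              Action("type", text="food nearby"),
              Action("done")]
    return script[step]


commands = run_task("open Xiaohongshu to search for food", scripted_planner)
for cmd in commands:
    print(" ".join(cmd))
```

Keeping the planner behind a plain callable means the model call can be swapped for a scripted stub like this one when testing the command translation on its own.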

In terms of security and controllability, the system is designed with a sensitive operation confirmation mechanism and supports user takeover in scenarios requiring manual intervention, such as login, payment, or verification codes, ensuring a safe and reliable user experience. Additionally, the Phone Agent has remote ADB debugging capabilities, supporting device connections via WiFi or mobile networks, providing developers and advanced users with flexible remote control and real-time debugging support.
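As a rough illustration of the confirmation and takeover idea, the sketch below gates each step behind a check and hands control back to the user when confirmation is refused. The keyword list and the function names are assumptions made for this example and are not taken from Open-AutoGLM. `adb connect host:port` is the standard command for attaching to a device over the network; port 5555 is only the commonly used default and is assumed here.

```python
from typing import Callable, List

# Hypothetical keyword list; a real system would likely use richer signals.
SENSITIVE_KEYWORDS = ("login", "password", "payment", "verification code")


def needs_confirmation(step_description: str) -> bool:
    """Return True when a step mentions a sensitive operation."""
    text = step_description.lower()
    return any(keyword in text for keyword in SENSITIVE_KEYWORDS)


def execute_step(step_description: str,
                 execute: Callable[[str], None],
                 confirm: Callable[[str], bool]) -> str:
    """Run a step, unless it is sensitive and the user declines to confirm it."""
    if needs_confirmation(step_description) and not confirm(step_description):
        return "handed_to_user"
    execute(step_description)
    return "executed"


def remote_connect_command(host: str, port: int = 5555) -> List[str]:
    """Build the ADB command line for connecting to a device over the network."""
    return ["adb", "connect", f"{host}:{port}"]


executed: List[str] = []
result_safe = execute_step("Scroll the feed", executed.append, lambda _: False)
result_risky = execute_step("Confirm payment of 25 yuan", executed.append, lambda _: False)
print(result_safe, result_risky, executed)
print(" ".join(remote_connect_command("192.168.1.20")))
```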

At present, Open-AutoGLM, implemented on this framework, has been applied to over 50 mainstream Chinese applications, including WeChat, Taobao, and Xiaohongshu. Capable of handling a variety of daily tasks, from social interaction and e-commerce shopping to content browsing, it is gradually evolving into an intelligent assistant that covers all aspects of users' daily lives, from clothing and food to housing and transportation.

The HyperAI website now features "Open-AutoGLM: A Smart Assistant for Mobile Devices," so come and try it out!

Online use: https://go.hyper.ai/QwvOU

A quick overview of hyper.ai's official website updates from December 8th to December 12th:

* High-quality public datasets: 10

* High-quality tutorial selection: 5

* This week's recommended papers: 5

* Community article interpretation: 5 articles

* Popular encyclopedia entries: 5

* Top conferences with January deadlines: 11

Visit the official website: hyper.ai

Selected public datasets

1. Envision Multi-Stage Event Visual Generation Dataset

Envision is a multi-image text-pair dataset released by the Shanghai Artificial Intelligence Laboratory, designed to test a model's ability to understand causal relationships and generate multi-stage narratives of real-world events. The dataset contains 1,000 event sequences and 4,000 four-stage text prompts, covering six major fields across the natural sciences and the humanities/history. The event materials are sourced from textbooks and online resources, selected by experts, and then generated and refined with GPT-4o to form narrative prompts with clear causal chains and progressively unfolding stages.

Direct use: https://go.hyper.ai/xD4j6

2. DetectiumFire Multimodal Fire Understanding Dataset

DetectiumFire, a dataset released by Tulane University in collaboration with Aalto University, is designed for flame detection, visual reasoning, and multimodal generation tasks. It has been included in the NeurIPS 2025 Datasets and Benchmarks Track, aiming to provide a unified training and evaluation resource for fire scenes in computer vision and vision-language models. The dataset contains over 145,000 high-quality, real-world fire images and 25,000 fire-related videos.

Direct use: https://go.hyper.ai/7Z92Z

3. CARE-PD Parkinson's 3D Gait Assessment Dataset

CARE-PD, released by the University of Toronto in collaboration with the Vector Institute, KITE Research Institute–UHN, and other institutions, is currently the largest publicly available 3D gait mesh dataset for Parkinson's disease. It has been selected for NeurIPS 2025 Datasets and Benchmarks and aims to provide a high-quality data foundation for clinical score prediction, Parkinson's gait representation learning, and unified cross-institutional analysis. The dataset contains gait records from 362 subjects across 9 independent cohorts from 8 clinical institutions. All gait videos and motion capture data have been uniformly processed and converted into anonymized SMPL 3D human gait meshes.

Direct use: https://go.hyper.ai/CH7Oi

4. PolyMath Multilingual Mathematical Reasoning Benchmark Dataset

PolyMath is a multilingual mathematical reasoning evaluation dataset released by Alibaba's Qwen team in conjunction with Shanghai Jiao Tong University. It has been selected for NeurIPS 2025 Datasets and Benchmarks and aims to systematically evaluate the mathematical understanding, reasoning depth, and cross-lingual consistency of large language models under multilingual conditions.

Direct use: https://go.hyper.ai/VM5XK

5. VOccl3D 3D Human Occlusion Video Dataset

VOccl3D is a large-scale synthetic dataset released by the University of California, focusing on 3D human understanding in complex occluded scenes. It aims to provide a more realistic benchmark for human pose estimation, reconstruction, and multimodal perception tasks. The dataset contains over 250,000 images and approximately 400 video sequences, constructed from background scenes, human actions, and diverse textures.

Direct use: https://go.hyper.ai/vBFc2

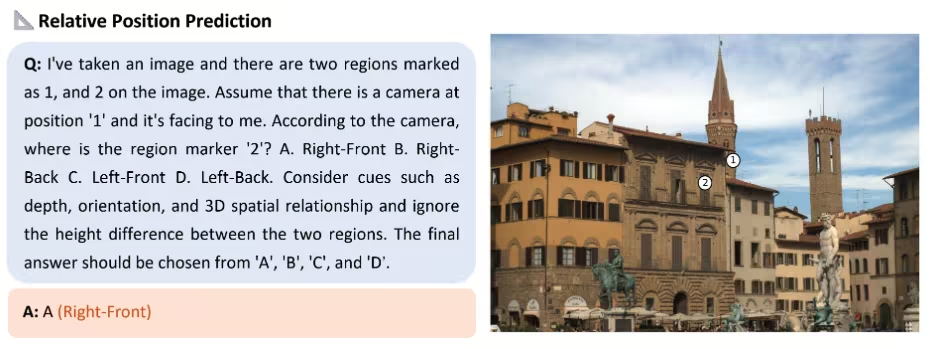

6. Spatial-SSRL-81k Spatial Aware Self-Supervised Dataset

Spatial-SSRL-81k is a self-supervised vision-language dataset for spatial understanding and spatial reasoning, released by the Shanghai Artificial Intelligence Laboratory in collaboration with Shanghai Jiao Tong University, the Chinese University of Hong Kong, and other institutions. It aims to provide large models with spatial awareness capabilities without manual annotation, thereby improving their reasoning and generalization performance in multimodal scenarios.

Direct use: https://go.hyper.ai/AfHSW

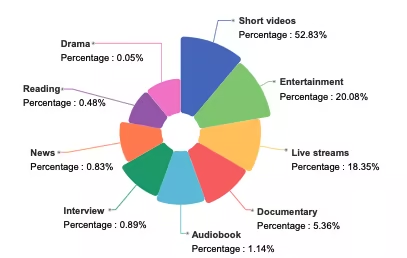

7. WenetSpeech-Chuan (Sichuan-Chongqing Dialect Speech Dataset)

WenetSpeech-Chuan is a large-scale Sichuan and Chongqing dialect speech dataset released by Northwestern Polytechnical University in conjunction with Hillbeike, China Telecom Artificial Intelligence Research Institute and other institutions. The dataset covers 9 real-world scenarios, with short videos accounting for 52.83% and the remainder spanning entertainment, live streaming, audiobooks, documentaries, interviews, news, reading, and dramas, presenting a highly diverse and realistic speech distribution.

Direct use: https://go.hyper.ai/dFlE2

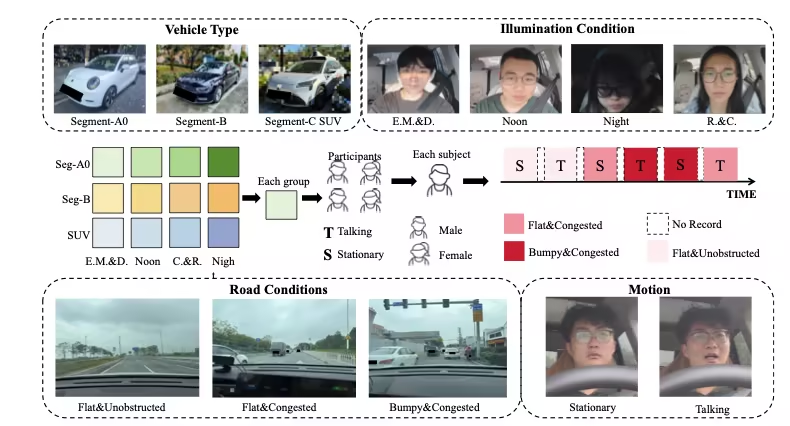

8. PhysDrive Physiological Measurement Dataset

PhysDrive is the first large-scale multimodal dataset for in-vehicle non-contact physiological measurement in a real driving environment, released by institutions such as Hong Kong University of Science and Technology (Guangzhou), Hong Kong University of Science and Technology, and Tsinghua University. It has been selected for NeurIPS 2025 Datasets and Benchmarks and aims to support the research and evaluation of driver state monitoring, smart cockpit systems, and multimodal physiological perception methods.

Direct use: https://go.hyper.ai/4qz9T

9. MMSVG-Bench Multimodal Vector Graphics Generation Benchmark Dataset

MMSVG-Bench is a comprehensive benchmark designed for multimodal SVG generation tasks, jointly released by Fudan University and StepFun. It has been selected for NeurIPS 2025 Datasets and Benchmarks and aims to fill the gap in the current field of vector graphics generation, which lacks a unified, open, and standardized test set.

Direct use: https://go.hyper.ai/WiZCR

10. PolypSense3D Polyp Size-Aware Dataset

PolypSense3D is a multi-source benchmark dataset designed specifically for depth-aware polyp size measurement, released by Hangzhou Normal University in collaboration with the Technical University of Denmark, Hohai University, and other institutions. It has been selected for NeurIPS 2025 and aims to provide high-quality training and evaluation resources for polyp detection, depth estimation, size measurement, and simulation-to-real transfer learning.

Direct use: https://go.hyper.ai/SZnu6

Selected Public Tutorials

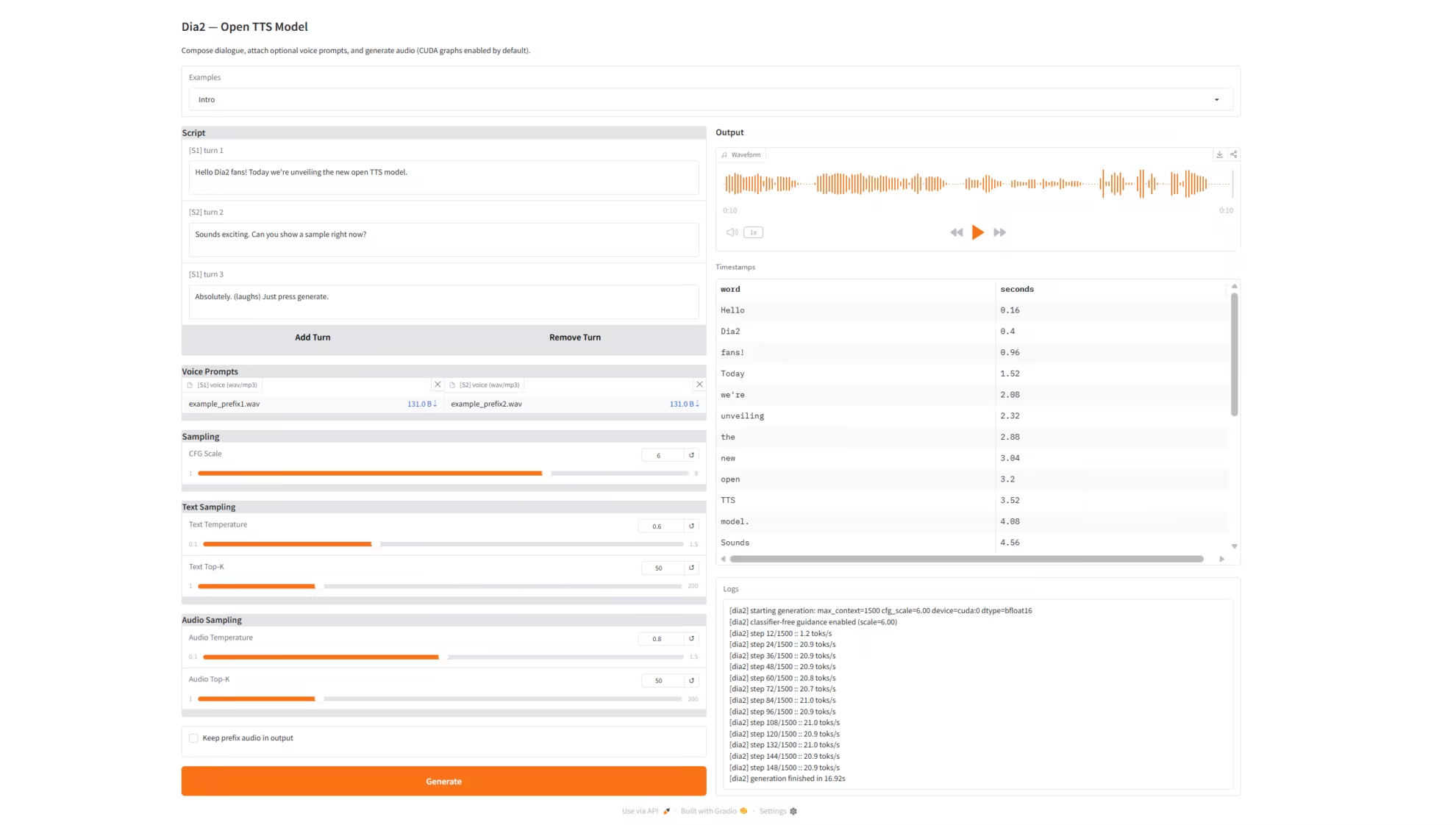

1. Dia2-TTS: Real-time speech synthesis service

Dia2-TTS is a real-time speech synthesis service built on the Dia2 large-scale speech generation model (Dia2-2B) released by the nari-labs team. It supports multi-turn dialogue script input, dual-role voice prompts (Prefix Voice), and multi-parameter controllable sampling, and it provides a complete web-based interactive interface through Gradio for high-quality conversational speech synthesis. The model accepts consecutive multi-turn dialogue scripts as direct input and generates natural, coherent, and consistent high-quality speech, making it suitable for applications such as virtual customer service, voice assistants, AI dubbing, and short drama generation.

Run online: https://go.hyper.ai/Qbfni

2. Open-AutoGLM: Smart Assistant for Mobile Devices

Open-AutoGLM is a mobile intelligent assistant framework released by Zhipu AI, built upon AutoGLM. The framework understands mobile screen content multimodally and helps users complete tasks through automated operations. Unlike traditional mobile automation tools, Phone Agent uses a vision-language model for screen perception, combined with intelligent planning capabilities, to automatically generate and execute operation flows.

Run online: https://go.hyper.ai/QwvOU

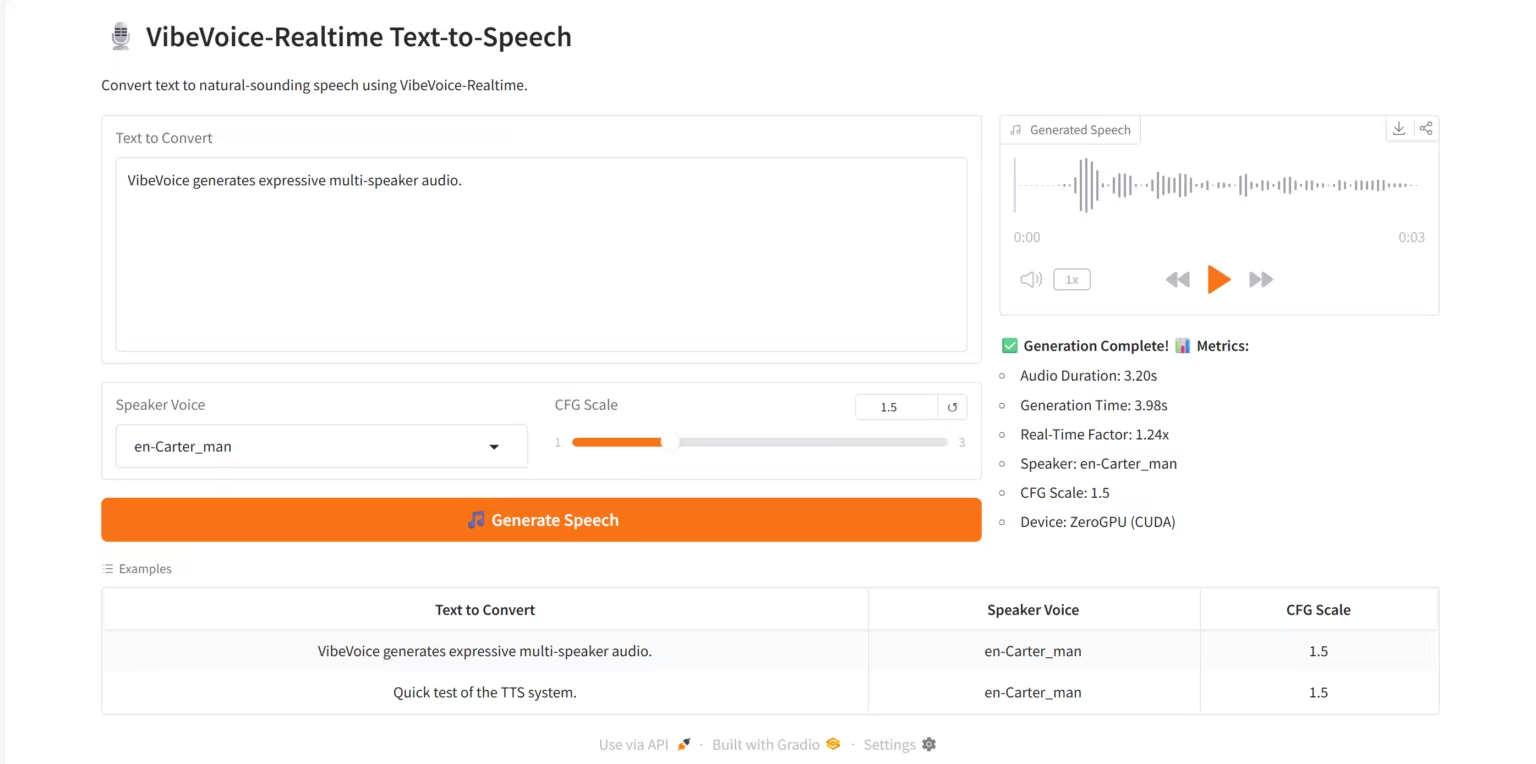

3. VibeVoice-Realtime TTS: Real-time speech synthesis service

VibeVoice-Realtime TTS is a high-quality real-time text-to-speech (TTS) system built upon the VibeVoice-Realtime-0.5B streaming speech synthesis model released by the Microsoft Research team. The system supports multi-speaker speech generation, low-latency real-time inference, and an interactive Gradio web interface.

Run online: https://go.hyper.ai/RviLs

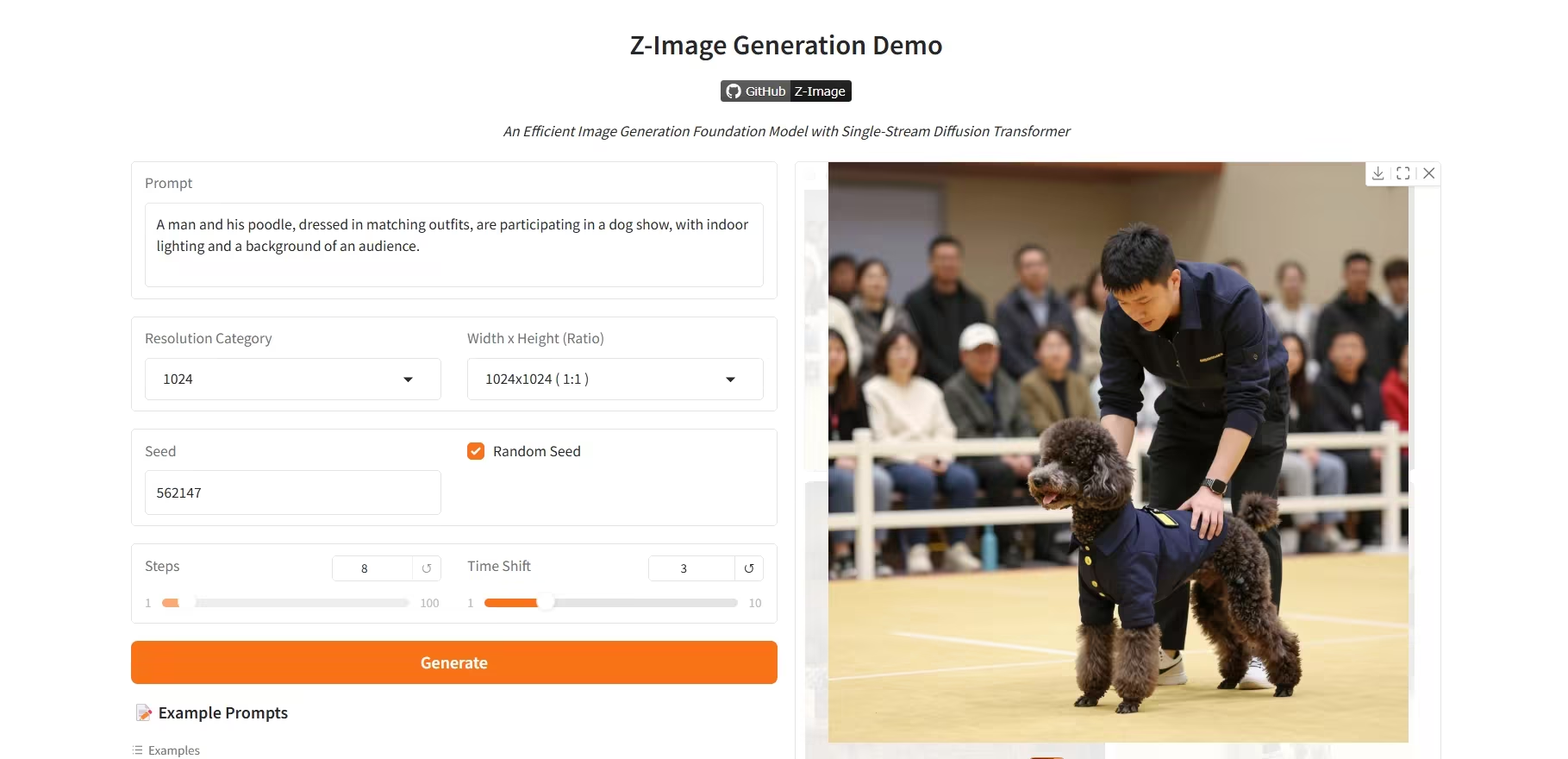

4. Z-Image-Turbo: A high-efficiency 6B-parameter image generation model

Z-Image-Turbo is a new-generation, high-efficiency image generation model released by Alibaba's Tongyi Qianwen team. With only 6B parameters, this model achieves performance comparable to flagship closed-source models with over 20B parameters, and it is particularly adept at generating high-fidelity, photorealistic portraits.

Run online: https://go.hyper.ai/R8BJF

5. Ovis-Image: A high-quality image generation model

Ovis-Image is a high-quality text-to-image (T2I) generation model system, built upon the Ovis-Image-7B high-fidelity text-to-image generation model released by the AIDC-AI team. This system employs a multi-scale Transformer encoder and an autoregressive generative architecture, demonstrating exceptional performance in high-resolution image generation, detail representation, and multi-style adaptation.

Run online: https://go.hyper.ai/NoaDw

This week's recommended papers

1. Wan-Move: Motion-controllable Video Generation via Latent Trajectory Guidance

This paper proposes Wan-Move, a simple and scalable framework that introduces motion control capabilities to video generation models. Existing motion-controllable methods often suffer from coarse-grained control and limited scalability, resulting in generated results that fail to meet practical application requirements. To bridge this gap, Wan-Move achieves high-precision, high-quality motion control. Its core idea is to directly endow the original conditional features with motion perception capabilities to guide video generation.

Paper link: https://go.hyper.ai/h3uaG

2. Visionary: The World Model Carrier Built on WebGPU-Powered Gaussian Splatting Platform

This paper proposes Visionary, an open-source, web-native real-time rendering platform that supports real-time rendering of various Gaussian splatting and mesh types. Based on a high-performance WebGPU rendering engine and combined with an ONNX inference mechanism executed per frame, the platform achieves dynamic neural processing capabilities while maintaining a lightweight design and a "click-to-run" browser experience.

Paper link: https://go.hyper.ai/NaBv3

3. Native Parallel Reasoner: Reasoning in Parallelism via Self-Distilled Reinforcement Learning

This paper proposes the Native Parallel Reasoner (NPR), a teacher-free framework that enables Large Language Models (LLMs) to autonomously evolve true parallel reasoning capabilities. Across eight reasoning benchmarks, NPR trained on the Qwen3-4B model achieves a performance improvement of up to 24.5% and an inference speedup of up to 4.6x.

Paper link: https://go.hyper.ai/KWiZQ

4. TwinFlow: Realizing One-step Generation on Large Models with Self-adversarial Flows

This paper proposes TwinFlow, a generative model training framework. The method does not rely on a fixed pre-trained teacher model and avoids standard adversarial networks during training, making it particularly suitable for building large-scale, high-efficiency generative models. In text-to-image generation tasks, the framework achieves a GenEval score of 0.83 with a single function evaluation (1-NFE), significantly outperforming strong baselines such as SANA-Sprint (a framework based on GAN loss) and RCGM (a framework based on consistency mechanisms).

Paper link: https://go.hyper.ai/l1nUp

5. Beyond Real: Imaginary Extension of Rotary Position Embeddings for Long-Context LLMs

Rotary Position Embeddings (RoPE), which rotate the query and key vectors in the complex plane, have become a standard method for encoding sequence order in Large Language Models (LLMs). However, standard implementations use only the real part of the complex dot product to compute attention scores and ignore the imaginary part, which contains important phase information. This can lose crucial relative-position detail when modeling long-range dependencies. This paper proposes an extension that reintroduces the previously discarded imaginary part, using the complete complex representation to construct a two-component attention score.

Paper link: https://go.hyper.ai/iGTw6
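To make the real-versus-imaginary distinction in the RoPE summary above concrete, here is a small sketch in plain Python. It assumes the usual complex-number view of RoPE, in which each pair of dimensions is treated as one complex number and rotated by an angle proportional to the token position. The function names and toy vectors are illustrative only, and the sketch does not reproduce how the paper combines the two components into its attention score.

```python
import cmath


def rope_rotate(vec, pos, base=10000.0):
    """Rotate each complex component by pos * theta_k, with theta_k = base ** (-k / d)."""
    d = len(vec)
    return [z * cmath.exp(1j * pos * base ** (-k / d)) for k, z in enumerate(vec)]


def complex_score(q, k, m, n):
    """Complex dot product of a query at position m and a key at position n."""
    q_rot = rope_rotate(q, m)
    k_rot = rope_rotate(k, n)
    return sum(a * b.conjugate() for a, b in zip(q_rot, k_rot))


# Toy query and key, each with two complex components (four real dimensions).
q = [complex(1.0, 0.5), complex(-0.3, 0.8)]
k = [complex(0.2, -0.4), complex(0.7, 0.1)]

s = complex_score(q, k, m=5, n=2)
standard_score = s.real   # the part standard RoPE attention keeps
discarded_part = s.imag   # the phase-carrying part that is normally dropped

# Shifting both positions by the same amount leaves the score unchanged,
# because the rotation depends only on the relative offset m - n.
s_shifted = complex_score(q, k, m=105, n=102)

print(standard_score, discarded_part)
print(abs(s - s_shifted) < 1e-9)
```

Both the real and the imaginary part depend only on the relative offset, which is why the imaginary part can be treated as additional relative-position information rather than noise.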

More AI frontier papers: https://go.hyper.ai/iSYSZ

Community article interpretation

1. A $20 billion gamble! xAI bets Musk's massive stakes on OpenAI, with its future commercial viability remaining the biggest question mark.

In 2025, xAI gained unprecedented capital momentum under Musk's strong impetus, but its commercialization remained highly dependent on the X and Tesla ecosystems, with cash flow and regulatory pressure rising simultaneously. Grok's "weak alignment" approach became increasingly dangerous against the backdrop of increasingly stringent global regulations, and its deep ties with X also weakened its independent growth potential. Faced with cost imbalances, limited business models, and regulatory friction, xAI's future still oscillates between the narratives of giants, policy changes, and Musk's personal will.

View the full report: https://go.hyper.ai/NmLi4

2. Full Agenda | Shanghai Innovation Academy, TileAI, Huawei, Advanced Compiler Lab, and AI9Stars gather in Shanghai for an in-depth analysis of the entire process of operator optimization.

The 8th Meet AI Compiler technical salon will be held on December 27th at Shanghai Innovation Academy. This session features experts from Shanghai Innovation Academy, TileAI Community, Huawei HiSilicon, Advanced Compiler Lab, and AI9Stars Community. They will share insights across the entire technology chain, from software stack design and operator development to performance optimization. Topics will include cross-ecosystem interoperability of TVM, optimization of PyPTO's fusion operators, low-latency systems with TileRT, key optimization techniques for Triton across multiple architectures, and operator optimization for AutoTriton, presenting a complete technical path from theory to implementation.

View the full report: https://go.hyper.ai/xpwkk

3. Online Tutorial | SAM 3 Achieves Promptable Concept Segmentation with 2x Performance Improvement, Processing 100 Detected Objects in 30 Milliseconds

While the SAM and SAM 2 models made significant progress in image segmentation, they could not automatically find and segment all instances of a concept within the input content. To fill this gap, Meta released the latest iteration, SAM 3. This new version not only significantly surpasses its predecessors in promptable visual segmentation (PVS), but also sets a new standard for promptable concept segmentation (PCS) tasks.

View the full report: https://go.hyper.ai/YfmLc

4. A Carnegie interdisciplinary team successfully captured traces of life from 3.3 billion years ago using a random forest model based on 406 samples.

The Carnegie Institution for Science in the United States, in collaboration with multiple universities around the world, has formed an interdisciplinary team to refine a "technology fusion" solution of pyrolysis gas chromatography-mass spectrometry and supervised machine learning, which can capture ancient traces of life in chaotic molecular fragments.

View the full report: https://go.hyper.ai/CNPMQ

5. Event Recap | Peking University, Tsinghua University, Zilliz, and MoonBit Discuss Open Source, Covering Video Generation, Visual Understanding, Vector Databases, and AI Native Programming Languages

HyperAI, as a co-organizing community of COSCon'25, hosted the "Industry-Research Open Source Collaboration Forum" on December 7th. This article is a summary of the key points from the in-depth presentations by four speakers. We will also share the full presentations in video format later, so stay tuned!

View the full report: https://go.hyper.ai/XrCEl

Popular Encyclopedia Articles

1. Bidirectional Long Short-Term Memory (Bi-LSTM)

2. Ground Truth

3. Layout control (Layout-to-Image)

4. Embodied Navigation

5. Frames Per Second (FPS)

We have compiled hundreds of AI-related terms to help you understand "artificial intelligence":

Top conferences with January deadlines

One-stop tracking of top AI academic conferences: https://go.hyper.ai/event

The above is all the content of this week’s editor’s selection. If you have resources that you want to include on the hyper.ai official website, you are also welcome to leave a message or submit an article to tell us!

See you next week!

About HyperAI

HyperAI (hyper.ai) is the leading artificial intelligence and high-performance computing community in China. We are committed to becoming the infrastructure for data science in China and to providing rich, high-quality public resources for domestic developers. So far, we have:

* Provided domestic accelerated download nodes for 1800+ public datasets

* Included 600+ classic and popular online tutorials

* Interpreted 200+ AI4Science paper cases

* Supported search for 600+ related terms

* Hosted the first complete Apache TVM Chinese documentation in China

Visit the official website to start your learning journey: