One-click Deployment of MMed-Llama-3-8B

Tutorial Introduction

This tutorial can be launched with just a single RTX 4090.

MMed-Llama-3-8B is a multilingual medical large language model released in 2024 by a research team from Shanghai Jiao Tong University and the Shanghai Artificial Intelligence Laboratory. With only 8 billion parameters, it demonstrates performance comparable to much larger models such as GPT-4, and it does particularly well in multilingual medical applications. The accompanying paper, "Towards building multilingual language model for medicine", has been published in Nature Communications.
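A rough back-of-the-envelope check helps explain why a single RTX 4090 is enough for a model of this size. The sketch below estimates the memory needed for the weights alone at different numeric precisions. The nominal count of 8 billion parameters and the 24 GB of VRAM on an RTX 4090 are the only inputs; activations and the KV cache need additional memory on top, so treat the numbers as a lower bound rather than a measurement.

```python
# Estimate weight-only memory for a nominal 8B-parameter model.
# Assumption: exactly 8e9 parameters (the real count differs slightly).

def weight_memory_gib(num_params: float, bytes_per_param: int) -> float:
    """Return the memory needed to store the weights, in GiB."""
    return num_params * bytes_per_param / 1024**3


NUM_PARAMS = 8e9          # nominal parameter count of an "8B" model
RTX_4090_VRAM_GIB = 24    # VRAM of a single RTX 4090

for precision, nbytes in [("float32", 4), ("float16", 2), ("int8", 1)]:
    gib = weight_memory_gib(NUM_PARAMS, nbytes)
    verdict = "fits" if gib < RTX_4090_VRAM_GIB else "does not fit"
    print(f"{precision:>8}: {gib:5.1f} GiB of weights, {verdict} in 24 GiB")
```

In half precision the weights take roughly 15 GiB, which leaves headroom on a 24 GB card, whereas full float32 weights alone would exceed it.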

The model is built on MMedC, a large-scale multilingual medical corpus containing about 25.5 billion medical-related tokens across six major languages: English, Chinese, Japanese, French, Russian, and Spanish. MMedC is intended to support autoregressive domain adaptation of general-purpose large language models.

On the MMedBench benchmark, MMed-Llama-3-8B outperforms existing open-source models and is comparable to GPT-4 in some respects. The model also performs strongly on multilingual medical question answering, which indicates that it handles non-English medical questions effectively.

This tutorial is a one-click deployment demo of the model. Clone it and open the API address to run inference directly.
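For readers who want to see what inference looks like outside the hosted demo, here is a minimal sketch using the Hugging Face `transformers` library. It is not the code behind this tutorial's demo. The repository id `Henrychur/MMed-Llama-3-8B` is an assumption about where the weights are published, and the `Question:`/`Answer:` prompt format and the helper names are hypothetical choices made for illustration.

```python
# Minimal local-inference sketch; assumes `torch` and `transformers` are
# installed and a CUDA GPU with enough memory for float16 weights.

MODEL_ID = "Henrychur/MMed-Llama-3-8B"  # assumed Hugging Face repository id


def build_prompt(question: str) -> str:
    """Build a plain completion-style prompt (hypothetical format)."""
    return f"Question: {question.strip()}\nAnswer:"


def generate_answer(question: str, max_new_tokens: int = 128) -> str:
    """Load the model in float16 and generate a continuation for the prompt."""
    # Imported lazily so the prompt helper works without the heavy libraries.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID, torch_dtype=torch.float16
    ).to("cuda")

    inputs = tokenizer(build_prompt(question), return_tensors="pt").to("cuda")
    with torch.no_grad():
        output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # Keep only the newly generated tokens, not the echoed prompt.
    new_tokens = output_ids[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `generate_answer("What are common symptoms of iron-deficiency anemia?")` on a GPU machine would download the weights on first use and return the generated text. Questions in any of the six corpus languages can be passed the same way.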

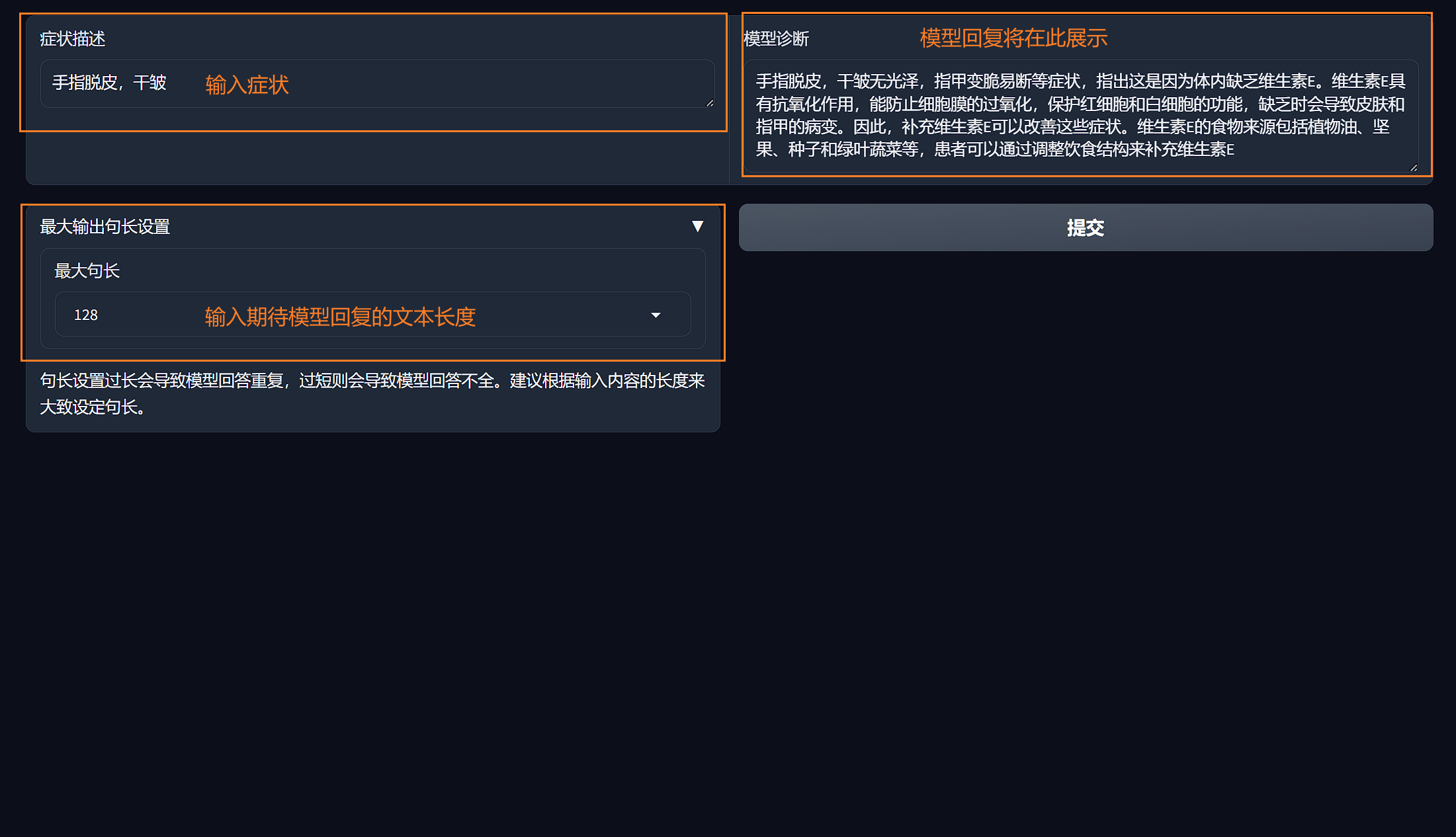

Effect examples

Run steps

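1. Click "Clone" in the upper right corner of this project, then click "Next" through each step: Basic Information > Select Compute > Review. Finally, click "Continue" to launch the project in your personal container.

2. Once the container resources have been allocated, you can open the operation page directly through the API address provided by the platform. Real-name verification must be completed beforehand, and there is no need to open the workspace for this step.

3. Chat with the model.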

Discussion and Exchange

🖌️ If you come across a high-quality project, please send us a message to recommend it! We have also set up a tutorial exchange group. You are welcome to scan the QR code and add the note [Tutorial Exchange] to join the group, discuss technical issues, and share your results.
