
ScaleNet

ScaleNet was jointly proposed in October 2025 by a research team from Beijing Institute of Technology, Huawei Noah's Ark Lab, City University of Hong Kong, and other institutions. The results were published in the paper "ScaleNet: Scaling up Pretrained Neural Networks with Incremental Parameters".

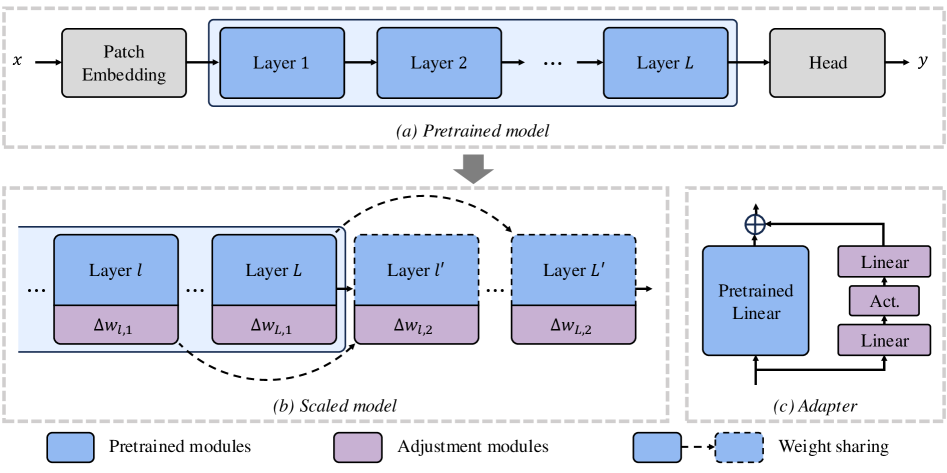

ScaleNet is an efficient method for scaling Vision Transformer (ViT) models. Rather than training a larger model from scratch, it rapidly scales an existing pretrained model while adding only a small number of parameters, offering a cost-effective route to larger ViTs. Specifically, ScaleNet increases depth by inserting additional layers into a pretrained ViT and uses layer-wise weight sharing to keep the parameter count low. Extensive experiments on ImageNet-1K show that ScaleNet significantly outperforms training scaled models from scratch: when scaling DeiT-Base to 2× its depth, it achieves a 7.42% accuracy improvement while using only one-third of the training epochs.
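To make the parameter-efficiency argument concrete, here is a minimal, framework-free sketch of depth scaling with layer-wise weight sharing. It is not the paper's implementation. It assumes that each pretrained block is immediately followed by (factor - 1) inserted layers that reuse its weights, and that each inserted layer owns only a small, zero-initialized list of extra parameters. The names `Block`, `SharedBlock`, `scale_depth`, `total_params`, and `extra_per_layer` are hypothetical and chosen for illustration.

```python
from dataclasses import dataclass
from typing import List, Union


@dataclass
class Block:
    """Stand-in for a pretrained transformer block (weights flattened to a list)."""
    weights: List[float]

    def num_params(self) -> int:
        return len(self.weights)


@dataclass
class SharedBlock:
    """Hypothetical inserted layer: reuses a pretrained block's weights and
    owns only a small list of extra (incremental) parameters."""
    base: Block
    extra: List[float]

    def num_params(self) -> int:
        # The base weights are shared, so only the extra parameters are new.
        return len(self.extra)


Layer = Union[Block, SharedBlock]


def scale_depth(blocks: List[Block], factor: int, extra_per_layer: int) -> List[Layer]:
    """Return a stack `factor` times deeper than `blocks`.

    Assumption: each pretrained block is immediately followed by
    (factor - 1) inserted layers that share its weights.
    """
    if factor < 1:
        raise ValueError("factor must be >= 1")
    scaled: List[Layer] = []
    for block in blocks:
        scaled.append(block)
        for _ in range(factor - 1):
            # Extra parameters start at zero (an assumption for illustration).
            scaled.append(SharedBlock(base=block, extra=[0.0] * extra_per_layer))
    return scaled


def total_params(layers: List[Layer]) -> int:
    """Count parameters; a SharedBlock contributes only its extras, so the
    shared base weights are counted once, through the original Block."""
    return sum(layer.num_params() for layer in layers)


if __name__ == "__main__":
    pretrained = [Block(weights=[0.1] * 1000) for _ in range(12)]
    deeper = scale_depth(pretrained, factor=2, extra_per_layer=10)
    print(len(deeper), total_params(pretrained), total_params(deeper))
```

With these toy sizes (12 blocks of 1,000 weights each), doubling the depth yields 24 layers but only 120 new parameters, about 1% of the original 12,000. Real ViT blocks and whatever incremental parameters ScaleNet actually uses will differ in size, but the accounting follows the same pattern.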
